The IAA prides itself on how students develop their “soft” skills: communication, collaboration, and professionalism. These skills ensure professional success and help us make friends along the way. However, you probably didn’t know that the IAA can help you leverage the “hard” skills of coding, statistics, and linear algebra to efficiently make a new friend in no time.

In the first week of classes, our summer practicum team made a new friend – literally.

This is TIPothy, or at least a rendering of what we think TIPothy would look like. TIPothy is named after our first team assignment, the TIPS assignment, for which we read and analyzed TIPS from previous classes.

I must admit that TIPothy is not a living, breathing student enrolled at the IAA; nevertheless, he has been “learning” alongside us. TIPothy is a specific type of artificial intelligence, an “NLG” or Natural Language Generation AI. At a high level, our friend TIPothy can read a lot of text, take a moment to digest it, and then generate his own sentences.

For example, we can share some wise words with TIPothy, such as,

“The days are long, but the year is short.”

He may repeat the sentence back to us, return a shorter version of the sentence (e.g., “the year is short.”), or just repeat parts of the sentence indefinitely (e.g., “the days are long, but the days are long, but the …”).

When we allow TIPothy to read, he learns new words or new ways to use the words he already knows, leading to more variety in his speech. Sometimes his responses don’t make much sense, or they may surprise us with a kernel of deep insight. But what is going on behind those neon blue eyes?

What makes TIPothy tick?

TIPothy’s “brain” is a simple Markov Chain. What’s that?

A Markov Chain is a random process that transitions between different “states.” Its defining feature is that the next state depends only on its current state.
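For readers who like symbols, that defining feature can be written in one line. This is only a sketch in notation the article does not otherwise use: here $X_n$ is assumed to stand for the state at step $n$, and $s$ for any state the chain can visit.

$$
P(X_{n+1} = s \mid X_n, X_{n-1}, \ldots, X_0) = P(X_{n+1} = s \mid X_n)
$$

In words: once you know the current state, the earlier history adds nothing to the prediction of the next one.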

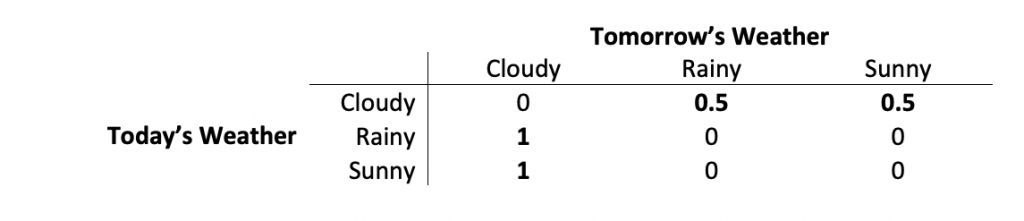

Let’s take the weather as an example. If the weather were determined by a Markov Chain, then we might define the day’s weather as either a “cloudy,” “rainy,” or “sunny” state. From one day to the next, the weather state can transition. Monday is cloudy, Tuesday is rainy, Wednesday is cloudy, Thursday is sunny, and Friday is cloudy.

| Monday | Tuesday | Wednesday | Thursday | Friday |
| --- | --- | --- | --- | --- |
| Cloudy | Rainy | Cloudy | Sunny | Cloudy |

In this short sample, rainy and sunny days are always followed by cloudy days. Probabilistically speaking, if today is rainy or sunny, there is a 100% chance that tomorrow will be cloudy. Cloudy days, however, are followed by a rainy day once and a sunny day once. So, if today is cloudy, there is a 50% chance tomorrow will be rainy and a 50% chance it will be sunny – it’s random. But more importantly, tomorrow’s weather depends only on today’s weather.

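We can summarize this information in a “transition matrix” like this, where each row is today’s weather and each column is the chance of seeing that weather tomorrow:

| Today | Tomorrow: Cloudy | Tomorrow: Rainy | Tomorrow: Sunny |
| --- | --- | --- | --- |
| Cloudy | 0% | 50% | 50% |
| Rainy | 100% | 0% | 0% |
| Sunny | 100% | 0% | 0% |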

If we started with a random weather state, we could generate a possible forecast for the next week, month, or year by looking up today’s weather in the matrix, randomly drawing tomorrow’s weather from the probabilities listed for it, and repeating the process with each new day.
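To make the forecasting loop concrete, here is a minimal Python sketch. The probabilities come straight from the five-day example above; the names `TRANSITIONS`, `next_day`, and `forecast` are made up for this illustration and are not part of TIPothy's actual code.

```python
import random

# Transition probabilities from the five-day example:
# outer key = today's weather, inner dict = chances for tomorrow.
TRANSITIONS = {
    "cloudy": {"rainy": 0.5, "sunny": 0.5},
    "rainy": {"cloudy": 1.0},
    "sunny": {"cloudy": 1.0},
}


def next_day(today, rng):
    """Draw tomorrow's weather using only today's weather."""
    options = TRANSITIONS[today]
    states = list(options)
    weights = list(options.values())
    return rng.choices(states, weights=weights, k=1)[0]


def forecast(start, days, rng=None):
    """Return a list of `days` weather states, beginning with `start`."""
    if rng is None:
        rng = random.Random()
    sequence = [start]
    while len(sequence) < days:
        sequence.append(next_day(sequence[-1], rng))
    return sequence


# One possible week of weather; the result changes from run to run.
print(forecast("cloudy", 7))
```

Passing a seeded generator, such as `random.Random(42)`, makes a forecast repeatable, which is handy when comparing runs.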

This is how TIPothy sees text when he reads. A sentence, paragraph, or entire Data Column article is nothing more than a sequence of states to him. Each word or punctuation mark is a state.

TIPothy in Action

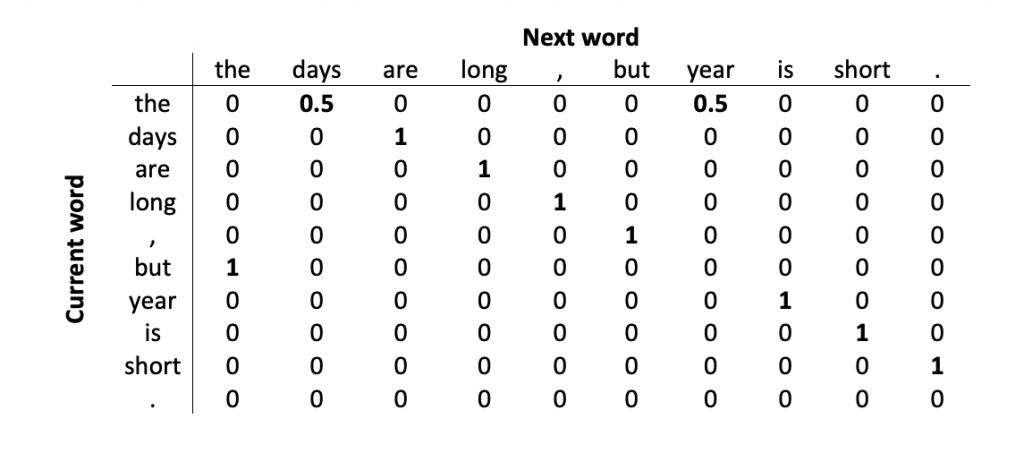

When TIPothy reads any length of text (i.e., a sequence of words and punctuation), he quickly creates his own transition matrix to summarize how frequently he sees one word follow another. From there, he uses this transition matrix to generate new sentences!

For example, if TIPothy had only read the sentence, “The days are long, but the year is short.”, then he’d process and generate text like so:

Step 1. Text Input.

We type out some text to TIPothy.

Step 2. Transition Matrix.

TIPothy converts this text to a transition matrix. Even though the word “the” appears twice in the sentence, TIPothy only needs to use a single column and row to represent that word.
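A rough sketch of how this step might look in Python follows. It assumes that text is lowercased and split into words and punctuation marks with a simple regular expression; the helper names `tokenize`, `build_transitions`, and `to_probabilities` are hypothetical, and TIPothy's real implementation may differ.

```python
import re
from collections import Counter, defaultdict


def tokenize(text):
    """Split text into lowercase words and punctuation marks."""
    return re.findall(r"[a-z']+|[.,!?;]", text.lower())


def build_transitions(tokens):
    """Count how often each token is directly followed by each other token."""
    counts = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        counts[current][following] += 1
    return counts


def to_probabilities(counts):
    """Convert each row of counts into probabilities that sum to 1."""
    return {
        state: {nxt: n / sum(row.values()) for nxt, n in row.items()}
        for state, row in counts.items()
    }


tokens = tokenize("The days are long, but the year is short.")
matrix = to_probabilities(build_transitions(tokens))

# "the" appears twice in the sentence but gets a single row.
print(matrix["the"])  # {'days': 0.5, 'year': 0.5}
```

Storing only the transitions that were actually seen (a dictionary of rows) avoids filling a full grid with zeros, which matters once the vocabulary grows beyond a single sentence.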

Step 3. Text Generation.

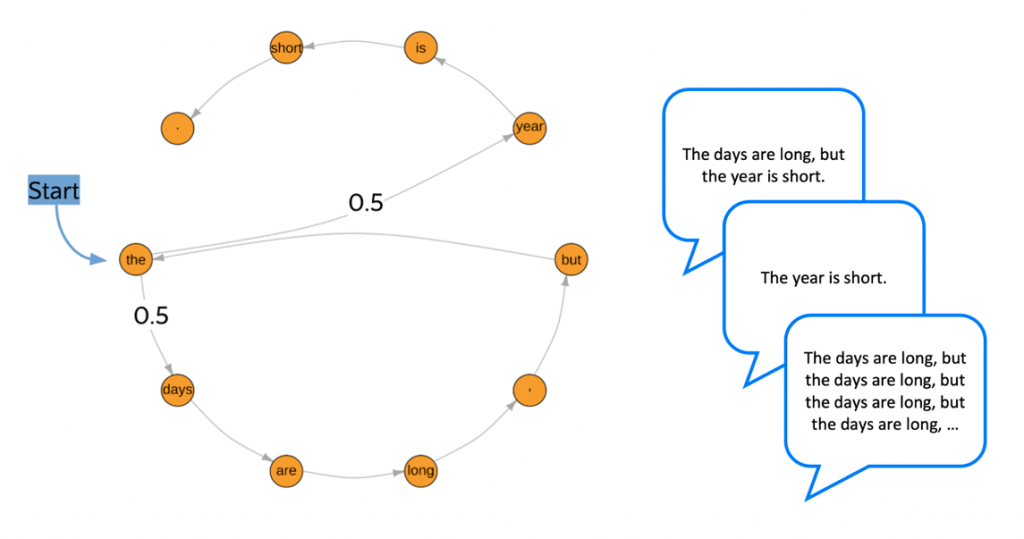

TIPothy then chooses a random word that he knows and uses his transition matrix to choose the next word. He continues in this loop until he reaches the period. Below we see the possible paths his sentence can take if he starts at the word “the.”
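The generation loop can be sketched in a few lines of Python. To keep the example self-contained, the successor lists for the example sentence are written out by hand, and a `max_tokens` cap is added as a safety assumption so a long “the days are long, but the days are long …” loop always ends. The names `SUCCESSORS`, `generate`, and `join_tokens` are illustrative only.

```python
import random

# Words that follow each token in "The days are long, but the year is short."
# Written out by hand for this sketch; listing a successor twice would make
# it twice as likely to be picked.
SUCCESSORS = {
    "the": ["days", "year"],
    "days": ["are"],
    "are": ["long"],
    "long": [","],
    ",": ["but"],
    "but": ["the"],
    "year": ["is"],
    "is": ["short"],
    "short": ["."],
}

PUNCTUATION = {".", ","}


def generate(start, rng=None, max_tokens=50):
    """Walk the chain from `start` until a period or the token cap is reached."""
    if rng is None:
        rng = random.Random()
    tokens = [start]
    while tokens[-1] != "." and len(tokens) < max_tokens:
        tokens.append(rng.choice(SUCCESSORS[tokens[-1]]))
    return tokens


def join_tokens(tokens):
    """Join tokens into text, attaching punctuation to the preceding word."""
    text = ""
    for token in tokens:
        text += token if token in PUNCTUATION or not text else " " + token
    return text


# Output varies between runs, since each step at "the" is a coin flip.
print(join_tokens(generate("the")))
```

Starting at “the,” every visit to that word is a coin flip between “days” (which loops back around) and “year” (which leads to the period), so short sentences are the most likely outcome but long repeats remain possible.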

TIPothy, much like Michael Scott from The Office, operates with one guiding principle: he just starts a sentence without knowing where he is going but hopes to find it somewhere along the way.

The TIP of the NLG Iceberg

TIPothy is far from the cutting edge in the field of NLG. Complicated, sophisticated, mysterious cousins to TIPothy are being trained all around the world on a variety of different text sources using algorithms far beyond simple Markov Chains.

There is LaMDA, a conversational neural language model developed by Google that convinced an engineer that it had become sentient. OpenAI boasts DALL·E 2, which generates breathtaking works of art based on text prompts. DALL·E 2 created the portrait of TIPothy you saw above based entirely on the phrase, “White robot with neon blue eyes in a black business suit, digital art.” In China, WuDao 2.0 is a large language model that powers a virtual university student that can “learn continuously, compose poetry, draw pictures, and will learn to code.”

TIPothy barely scratches the surface of what I learned about text analytics, NLG, and the field of language modeling at the IAA. Yet, he’s shown himself to be a reliable friend and helpful introduction to the subject. TIPothy may be the first friend I made at the IAA, but I’m confident that he won’t be the last.

ChatGPT: The Talk of the Tech World

Since the initial draft of this article in October 2022, the world of NLG has experienced an explosion of excitement and advancements. One of the most notable examples is the virality of ChatGPT, a state-of-the-art language model developed by OpenAI that has captured the attention of users worldwide. ChatGPT has demonstrated an unprecedented ability to understand and respond to complex prompts, making it an incredibly versatile and powerful tool. Additionally, the recent unveiling of GPT-4, the successor to the GPT-3.5 model behind ChatGPT and the very model generating THIS paragraph, has taken the world by storm. Building upon its predecessors, GPT-4 offers even more advanced language capabilities and further extends the possibilities of what can be achieved with NLG. TIPothy and his more sophisticated cousins ensure that we’ll remain at the forefront of this rapidly evolving field, and we can’t wait to see what the future has in store for NLG technology.

Columnist: Stefán Ragnarsson